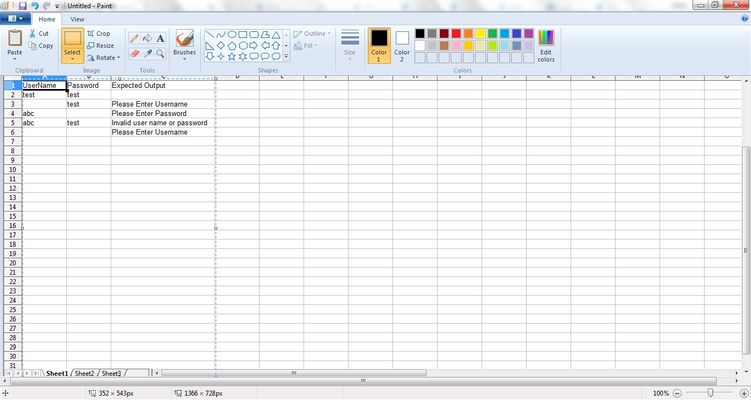

The way I set my input up in Excel is:

Run Flag / Result / Function Name / Data 1 / Data 2 / Data 3 / ... and so on, for as many Data inputs as you want.

Run Flag - indicates whether that line should be run or not.

Result - starts empty and is populated when the test runs. (The input file becomes the high-level results file. More details are stored in an associated log file.)

Function Name - maps to a function in TestComplete.

Data 1-? - It reads along the row until it hits a blank cell, so you can have as much input data as you need. Each Data cell has to start with a prefix that tells it what the cell contains. "P:" is an input parameter. "E:" is an expected result. "FILE:" is also an input parameter, but the input is in a file: it follows the path given and loads the text content of the file. "D:" is a dependency, which means it checks whether the stated previous test line passed (or failed) and runs (or doesn't run) this line as a result. "M:" is a marker, which is what dependency checks refer to (relying on line numbers in the spreadsheet would be bad). You can have as many markers, dependencies, input parameters, and expected results as you want or a function needs.
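To give a rough idea of the prefix handling, here is a minimal Python sketch (not my actual script extension code). The names `ParsedRow` and `parse_data_cells` are made up for illustration, and it assumes the prefixes are case-sensitive and that files are UTF-8 text.

```python
from dataclasses import dataclass, field
from pathlib import Path

# Prefixes described above; none of them is a prefix of another.
PREFIXES = ("FILE:", "P:", "E:", "D:", "M:")


@dataclass
class ParsedRow:  # hypothetical container, for illustration only
    params: list = field(default_factory=list)
    expected: list = field(default_factory=list)
    dependencies: list = field(default_factory=list)
    markers: list = field(default_factory=list)


def parse_data_cells(cells):
    """Sort the Data cells of one row into buckets, stopping at the first blank cell."""
    parsed = ParsedRow()
    for cell in cells:
        text = "" if cell is None else str(cell).strip()
        if not text:
            break  # a blank cell ends the row
        for prefix in PREFIXES:
            if text.startswith(prefix):
                value = text[len(prefix):].strip()
                break
        else:
            raise ValueError(f"Data cell has no known prefix: {text!r}")
        if prefix == "P:":
            parsed.params.append(value)
        elif prefix == "FILE:":
            # The value is a path; the file's text content becomes the parameter.
            parsed.params.append(Path(value).read_text(encoding="utf-8"))
        elif prefix == "E:":
            parsed.expected.append(value)
        elif prefix == "D:":
            parsed.dependencies.append(value)
        else:  # "M:"
            parsed.markers.append(value)
    return parsed
```

So a row with the Data cells `P:admin`, `E:Login OK`, `M:login` ends up with one parameter, one expected result and one marker, and anything after the first blank cell is ignored.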

Similarly, it reads down the spreadsheet until it hits a blank line. This is assumed to be the end of a test. You can add notes easily enough by simply putting a note on a line (usually in a different colour so it stands out) but leaving the run flag blank.
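The row loop could look something like the sketch below. It is only an illustration under a few assumptions of mine: a run flag of "Y" means "run this line", a "D:" cell names a marker that must have passed, and the result strings ("PASS", "FAIL", "SKIPPED") and the names `run_sheet` and `functions` are invented for the example.

```python
def is_blank(row):
    """True when every cell in the row is empty."""
    return all(cell is None or str(cell).strip() == "" for cell in row)


def run_sheet(rows, functions):
    """Run each flagged row; rows look like [run flag, result, function name, data...].

    `functions` maps function names to callables (hypothetical stand-ins for the
    real test functions). The result column of each row is filled in place.
    """
    marker_results = {}
    for row in rows:
        if is_blank(row):
            break  # a blank line is the end of the test
        row += [""] * (3 - len(row))  # pad short rows so the indexes below exist
        if str(row[0] or "").strip().upper() != "Y":
            continue  # run flag not set: a note or a switched-off test
        name = str(row[2] or "").strip()
        data = []
        for cell in row[3:]:
            text = str(cell or "").strip()
            if not text:
                break  # a blank cell ends the data for this row
            data.append(text)
        params = [d[2:].strip() for d in data if d.startswith("P:")]
        deps = [d[2:].strip() for d in data if d.startswith("D:")]
        markers = [d[2:].strip() for d in data if d.startswith("M:")]
        if any(marker_results.get(dep) != "PASS" for dep in deps):
            result = "SKIPPED"  # a line this one depends on did not pass
        else:
            try:
                result = "PASS" if functions[name](*params) else "FAIL"
            except Exception as exc:  # bad input must never kill the run
                result = f"ERROR: {exc}"
        row[1] = result
        for marker in markers:
            marker_results[marker] = result
    return rows
```

Dependencies look up markers rather than row numbers, so inserting or deleting lines in the sheet doesn't break them.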

When it completes a sheet, it looks in its input folder to see if there are more. It works through all the ones it finds until they're done.
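As a rough sketch of that folder loop in Python: it rescans the folder after every sheet, so a sheet dropped in mid-run still gets picked up. The `.xlsx` extension, the alphabetical order and the names `run_all_sheets` and `run_one_sheet` are assumptions for the example.

```python
from pathlib import Path


def run_all_sheets(input_folder, run_one_sheet):
    """Run every spreadsheet in the folder, re-checking the folder after each one.

    `run_one_sheet` is a hypothetical callable that takes the path of one sheet.
    Returns the sheets that were run, in the order they were run.
    """
    folder = Path(input_folder)
    done = []
    while True:
        # Look again each time round, in case sheets were added during the run.
        pending = sorted(p for p in folder.glob("*.xlsx") if p not in done)
        if not pending:
            return done
        run_one_sheet(pending[0])
        done.append(pending[0])
```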

I provide the flexible framework. Others populate the spreadsheets for tests (and there can be many spreadsheets). So everything can be switched on or off. Test sheets can be moved in and out of the run folder. Individual tests within a sheet can be switched off independently. So it's fully granular. It also writes results to TFS via the TFS RESTful API. That can also be switched on and off during a run and can refer to multiple projects, suites and tests.

Obviously, this means I also have a TON of validation around my inputs. Have to. To stop bad input data crashing it. It also means I need to provide decent documentation so that the testers know what each function does. What it expects for input. And what it can check as an expected result.
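For a flavour of the sort of checks involved, here is a small Python sketch that collects every problem in a row instead of stopping at the first one, so the tester gets the full list back in one go. The function name `validate_row`, its arguments and the message wording are all invented for illustration; the real validation covers a lot more.

```python
from pathlib import Path

KNOWN_PREFIXES = ("FILE:", "P:", "E:", "D:", "M:")


def validate_row(function_name, data_cells, known_functions, known_markers):
    """Return a list of problems found in one row (an empty list means it looks OK)."""
    problems = []
    if function_name not in known_functions:
        problems.append(f"unknown function {function_name!r}")
    for index, cell in enumerate(data_cells, start=1):
        text = str(cell).strip()
        prefix = next((p for p in KNOWN_PREFIXES if text.startswith(p)), None)
        if prefix is None:
            problems.append(f"Data {index}: missing or unknown prefix in {text!r}")
            continue
        value = text[len(prefix):].strip()
        if not value:
            problems.append(f"Data {index}: {prefix} has no value")
        elif prefix == "FILE:" and not Path(value).is_file():
            problems.append(f"Data {index}: file not found: {value}")
        elif prefix == "D:" and value not in known_markers:
            problems.append(f"Data {index}: dependency on undefined marker {value!r}")
    return problems
```

Anything that comes back non-empty can be written into the Result column and the line skipped, rather than letting the bad input reach the test function.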

All this is built as script extensions so it's all pretty much built into the IDE now.

Works great. But your error handling has to be VERY robust.