Issues and Feedback about Smart Assertions

Some issues and feedback about Smart Assertions after a day or so of using them.

I would like to provide some hopefully helpful feedback on the newly introduced Smart Assertion.

Please set the default name based on the type selected by the user, e.g. "Smart Assertion - Metadata" or "Smart Assertion - Data".

The Smart Assertion does not handle simple text responses the way message content assertions do. The following response cannot be parsed by the Smart Assertion. Sure, it isn't following good API design practice, but it is a valid HTTP response and it is valid JSON data.

    HTTP/1.1 200 OK
    Date: Mon, 19 Jul 2021 21:43:03 GMT
    Content-Type: application/json; charset=utf-8
    Content-Length: 29
    Connection: keep-alive
    Server: Kestrel
    Cache-Control: no-cache
    Pragma: no-cache
    Expires: -1

    "Your name has been updated."

It doesn't seem all that smart to automatically include and enable, in the metadata assertions, data that is obviously going to change from response to response. Who thought that automatically adding response headers such as Date and Content-Length was a good idea? Date is guaranteed to change with every request. Content-Length is also likely to vary. This is not going to be an enjoyable experience for the novice user of ReadyAPI: "Use our Smart Assertion on your response, make your testing easier, you'll get a fail the moment you re-run the test".

3.3K Views, 5 likes, 10 Comments

Ramping up your usage of SOAP UI NG? Check out our Free weekly Training webinar!

Hello all,

Have you recently bought new Ready! API licenses and are about to kick-start your API tests? Register here for our free weekly interactive webinar. The next one is on the 7th of September (the day after Labor Day).

What to expect?

- Advice on getting up and running on your SOAP and REST projects
- Hidden gems within the tool that you may not uncover on your own
- Personalized question-and-answer with our API experts

If you have 10+ users and would like to get your users on-boarded, please reply to this message and I'll be in touch.

Cheers,
Katleen B
Snr. Customer Success Advisor EMEA
kbb(at)smartbear.com

1.4K Views, 2 likes, 0 Comments

Is it possible to set the test case failed reason that is used in the reports?

This question came up a couple of days ago when going through a TestSuite Report with other team members. Is it possible to set the test case failed reason to a custom message? Currently, when a TestCase fails, the report simply shows the reason as 'Failing due to failed test step', and I was asked, "That's a bit vague, any chance of getting a more descriptive message there?"

This leads to another question: what other reasons are there? I checked back through a few reports and all I ever see is "Failing due to failed test step." I looked through the javadocs and gained no particular insight there.

P.S. I would love for there to be a "Curiosity" label for questions like these. 😼

332 Views, 1 like, 2 Comments

How to not crash ReadyAPI on a request with a lot of data, like a general "get all customers" call

Hello, I'm trying to use Smart Assertions in ReadyAPI to easily check for correct data in a response. My problem is that trying to create a Smart Assertion on an endpoint whose response contains a lot of data leaves ReadyAPI unresponsive and breaks the UI. Is there any way to create Smart Assertions on big general endpoints without completely softlocking the application?

622 Views, 1 like, 5 Comments

3.44 issue with java.net.SocketTimeoutException: Read timed out

The test step is failing with "java.net.SocketTimeoutException: Read timed out". We tried increasing the socket timeout, but the step still fails after the default 1-minute timeout. This project and test step work fine in ReadyAPI 3.41, but the same test fails in 3.44 and in the latest 3.45. Please let us know what needs to be done here, as this is impacting our regression tests.

Solved

1.3K Views, 1 like, 2 Comments

How to best assert unordered results?
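
For the question in this title, one tool-independent way to compare results whose order can differ between calls is to treat both lists as multisets. The sketch below is plain Python rather than a ReadyAPI feature or script API; the helper names `canonical` and `same_items_any_order` and the sample records are hypothetical. It assumes the items are JSON-serializable, and it stays order-sensitive for lists nested inside an item.

```python
import json
from collections import Counter


def canonical(item):
    """Serialize an item with sorted keys so equal items give equal strings."""
    return json.dumps(item, sort_keys=True)


def same_items_any_order(expected, actual):
    """Return True if both lists hold the same items, ignoring order.

    Counter keeps duplicates significant: [a, a, b] does not match [a, b, b].
    """
    return Counter(map(canonical, expected)) == Counter(map(canonical, actual))


# Hypothetical sample data: same records, different order and key order.
expected = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]
actual = [{"name": "Bob", "id": 2}, {"name": "Ann", "id": 1}]
print(same_items_any_order(expected, actual))
```

Comparing counts instead of sorting avoids having to define an ordering for dictionaries, at the cost of serializing every item once.
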

One of the great features of ReadyAPI is the Smart Assertion. Smart Assertions are really useful when dealing with large amounts of data, and they can be easily adjusted after changes by using "Load from Transactions". This saves a lot of time compared with manually adding each assertion. However, there are a few issues that make them less than ideal.

1. No search facility to quickly find an assertion.
2. No facility to add flows like If and Else or loops.
3. No conversion to script.
4. And especially no facility to deal with unordered list results, where the order can be different with each call.
5. Also no update feature that allows preserving properties and previous setups.

Therefore, we unfortunately can't really use this feature with most of our calls. I would like to know if others have similar problems to solve and if there is a recommended way to deal with this apart from writing complex scripts. Data-driven tests might be one way.

561 Views, 1 like, 3 Comments

Swagger compliance assertion with content type different than application/json always fails

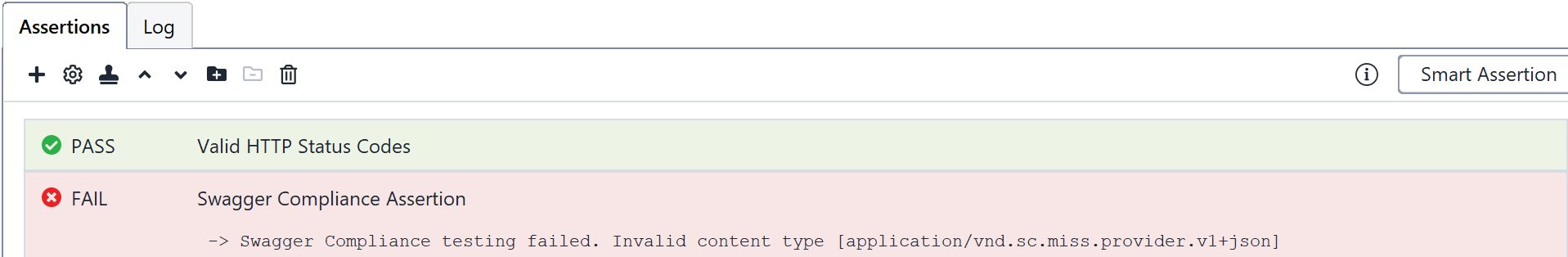
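
As a rough illustration of the RFC 6839 suffix rule this post relies on, the check below accepts `application/json` as well as any media type ending in `+json`. It is a plain Python sketch, not how the Swagger compliance assertion is implemented; the function name `is_json_media_type` is hypothetical.

```python
def is_json_media_type(content_type):
    """Return True for application/json or any media type with a +json suffix."""
    # Drop parameters such as ";charset=UTF-8" and normalize case,
    # since media type names are case-insensitive.
    media_type = content_type.split(";", 1)[0].strip().lower()
    return media_type == "application/json" or media_type.endswith("+json")


print(is_json_media_type("application/vnd.sc.miss.provider.v1+json;charset=UTF-8"))  # True
print(is_json_media_type("text/plain"))  # False
```

The same pattern would apply to `+xml` by swapping the suffix and the base type.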

The Swagger compliance assertion validates the content type too strictly. Currently only the content types application/xml and application/json are allowed; otherwise the Swagger compliance assertion fails. According to RFC 6839, the content type can contain +json (or +xml) to signify that the document can be interpreted as JSON (or XML) in addition to some other schema.

https://datatracker.ietf.org/doc/html/rfc6839
https://en.wikipedia.org/wiki/Media_type#Suffix

I have included the response I received from an API call and a screenshot of the error we receive. Is there a workaround so that the Swagger compliance assertion can still be used to validate the response when the content type is not application/json or application/xml?

I tried modifying the response headers, inspired by https://support.smartbear.com/readyapi/docs/testing/scripts/samples/modify.html, but I wasn't able to do this. I added a RequestFilter.afterRequest event with the following code, but editing the content type does not seem possible:

    context.httpResponse.contentType = "application/json;charset=UTF-8"

The response:

    HTTP/1.1 200 OK
    X-Backside-Transport: OK OK
    Connection: Keep-Alive
    Transfer-Encoding: chunked
    Content-Type: application/vnd.sc.miss.provider.v1+json;charset=UTF-8
    Date: Wed, 20 Oct 2021 12:04:05 GMT
    X-Global-Transaction-ID: 526b1ebd617005ab093d96d3
    Cache-Control: no-cache, no-store, max-age=0, must-revalidate
    Expires: 0
    Pragma: no-cache
    Strict-Transport-Security: max-age=31536000 ; includeSubDomains
    X-Content-Type-Options: nosniff
    X-Frame-Options: DENY
    X-Vcap-Request-Id: d82e27c9-86b0-40f2-7038-1f2102fd6d8f
    X-Xss-Protection: 1; mode=block
    X-RateLimit-Limit: name=default,100;
    X-RateLimit-Remaining: name=default,99;

Solved

1.3K Views, 1 like, 1 Comment

Can ReadyAPI (SoapUI Pro) annotate the Project, Test Suite, Test Case, Step ?

I currently use SoapUI Pro in ReadyAPI 2.8.2 to run many tests against evolving API services. I would like to document changes to various parts of the tests, but I cannot seem to find a built-in annotation function to record changes against the Project, the Test Suite, the Test Case, Steps, Assertions, etc.

I did not want to pollute the SQL, the Requests, etc. with comments, but was looking for a consistent annotation function that allows for broad explanation at the higher levels and more detailed explanations at the lower levels. At present I use long descriptive Step names, SQL comments, comments in the Requests, etc., but this has its limitations and is not consistent.

A notation feature added to all stages of the testing process would be very useful, maybe with a date/time stamp, maybe in the form of a chronological log. Does anyone have any suggestions on how to annotate tests? Thank you.

Solved

1K Views, 1 like, 1 Comment

How to get passed assertions in Ready API generated reports?

Hi, I have a test case with the following test steps:

- Step 1 - RetrieveDataScenario - GroovyScript - Retrieves data records from the MySQL DB using my test case name
- Step 2 - PopulateDataScenario - DataSource - Populates the data log using the records retrieved by the RetrieveDataScenario test step
- Step 3 - GetRebates - RESTRequest - Using the data row(s) retrieved in DataSource, executes the REST Request and asserts that mandatory tags are present (for example, 3 assertions check that the RebateId, RebateAmount, and RebateExpirationDate tags exist in the response; of the three, the RebateExpirationDate tag is missing from the response, so that assertion fails and the test step fails as well)
- Step 4 - IterateTestCase - Repeats the GetRebates REST Request until all data rows are used

When I run this test case using the testrunner command below, I see only the failed assertion in the GetRebates test step log file. Is there a way to include passed assertions in this text file as well?

    testrunner.bat -sCQ_SMOKE_TEST -cSTANDARD_FINANCE_WITH_REBATES_ONLY -r -a "-fC:\Users\ramus\Documents\Customer Quotes\results" "-RJUnit-Style HTML Report" "-EDefault environment" -I -S "C:\Users\ramus\Documents\Customer Quotes\CQ-Automation-readyapi-project.xml"

914 Views, 1 like, 0 Comments